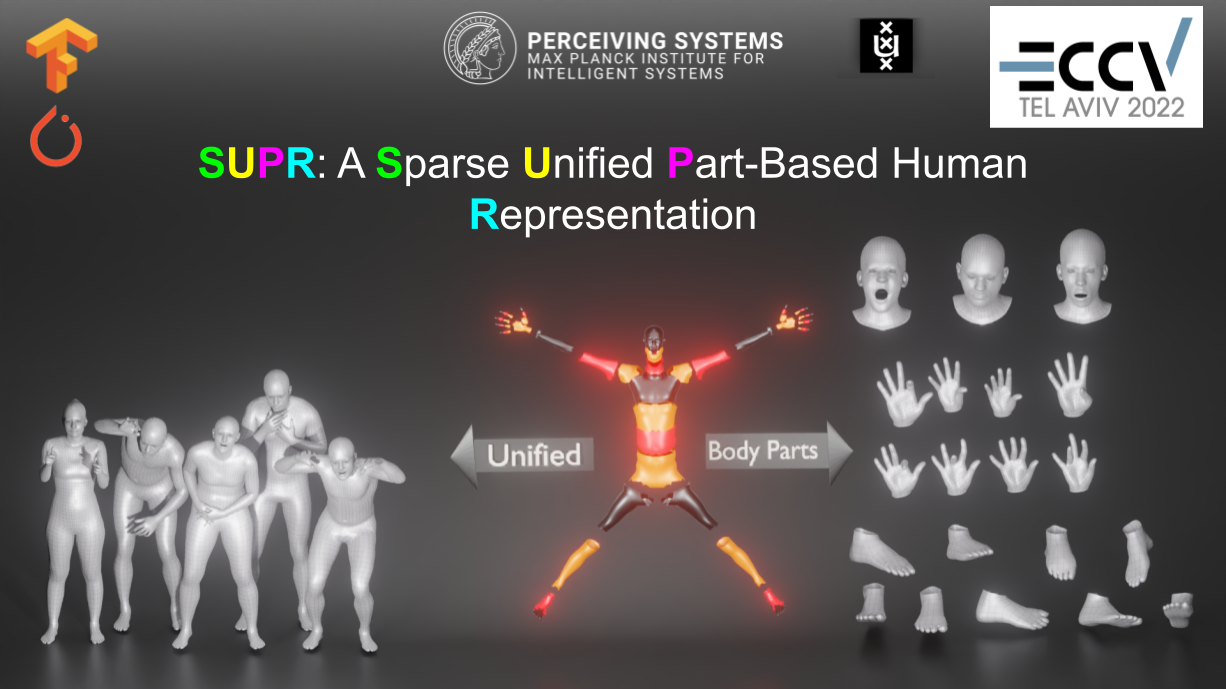

SUPR: A Sparse Unified Part-Based Human Representation

Ahmed A. A. Osman, Timo Bolkart, Dimitrios Tzionas and Michael J. Black

ECCV 2022

SUPR is a factorized representation of the human body that can be separated into a full suite of body part models, including a novel foot model with learned contact deformations. In contrast to existing body part models such as FLAME and MANO, the separated SUPR head and hand models capture the full range of motion of the head and hands.

Abstract

Statistical 3D shape models of the head, hands, and full body are widely used in computer vision and graphics. Despite their wide use, we show that existing models of the head and hands fail to capture the full range of motion for these parts. Moreover, existing work largely ignores the feet, which are crucial for modeling human movement and have applications in biomechanics, animation, and the footwear industry. The problem is that previous body part models are trained using 3D scans that are isolated to the individual parts. Such data does not capture the full range of motion of these parts, e.g. the motion of the head relative to the neck. Our observation is that full-body scans provide important information about the motion of the body parts. Consequently, we propose a new learning scheme that jointly trains a full-body model and specific part models using a federated dataset of full-body and body-part scans. Specifically, we train an expressive human body model called SUPR (Sparse Unified Part-Based Representation), where each joint strictly influences a sparse set of model vertices. The factorized representation enables separating SUPR into an entire suite of body part models: an expressive head (SUPR-Head), an articulated hand (SUPR-Hand), and a novel foot (SUPR-Foot). Note that feet have received little attention and existing 3D body models have highly under-actuated feet. Using novel 4D scans of feet, we train a model with an extended kinematic tree that captures the range of motion of the toes. Additionally, feet deform due to ground contact. To model this, we include a novel non-linear deformation function that predicts foot deformation conditioned on the foot pose, shape, and ground contact. We train SUPR on an unprecedented number of scans: 1.2 million body, head, hand and foot scans.
We quantitatively compare SUPR and the separate body parts to existing expressive human body models and body-part models and find that our suite of models generalizes better and captures the body parts’ full range of motion. SUPR is publicly available for research purposes.
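The claim that each joint strictly influences a sparse set of vertices can be illustrated with plain linear blend skinning: if a vertex's skinning weight for a joint is zero, rotating that joint cannot move the vertex. The following is a minimal NumPy sketch under that assumption, not SUPR's released code; the helper names (`rot_z`, `pivot_transform`, `sparse_lbs`) and the toy four-vertex mesh are hypothetical, and SUPR learns its sparse weights from data rather than setting them by hand as done here.

```python
import numpy as np

def rot_z(angle):
    """3x3 rotation matrix about the z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def pivot_transform(R, pivot):
    """4x4 rigid transform that rotates by R about the point `pivot`."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = pivot - R @ pivot
    return T

def sparse_lbs(vertices, weights, joint_transforms):
    """Linear blend skinning.

    vertices:         (V, 3) rest-pose vertices
    weights:          (V, J) skinning weights; a zero entry means joint j
                      has no influence on vertex v
    joint_transforms: (J, 4, 4) per-joint transforms relative to the rest pose
    """
    homo = np.hstack([vertices, np.ones((len(vertices), 1))])        # (V, 4)
    blended = np.einsum("vj,jab->vab", weights, joint_transforms)    # (V, 4, 4)
    return np.einsum("vab,vb->va", blended, homo)[:, :3]             # (V, 3)

# Toy chain of four vertices driven by two joints.
vertices = np.array([[0.0, 0.0, 0.0], [0.5, 0.0, 0.0],
                     [1.0, 0.0, 0.0], [1.5, 0.0, 0.0]])
weights = np.array([[1.0, 0.0],    # vertices 0 and 1: joint 0 only
                    [1.0, 0.0],
                    [0.5, 0.5],    # vertex 2: shared
                    [0.0, 1.0]])   # vertex 3: joint 1 only
transforms = np.stack([np.eye(4),  # joint 0 stays at rest
                       pivot_transform(rot_z(np.pi / 2), np.array([1.0, 0.0, 0.0]))])
posed = sparse_lbs(vertices, weights, transforms)
```

Bending joint 1 by 90 degrees moves vertex 3 to roughly `(1.0, 0.5, 0.0)`, while vertices 0 and 1, whose weights for joint 1 are zero, stay exactly where they were.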

Models

We release a total of 18 models:

SUPR (Male, Female and Gender-Neutral)

SUPR is an expressive human body model with a fully articulated head, hands and feet:

- A total of 75 joints, including 20 joints for toe articulation.

- Sparse spatially local deformations.
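"Sparse spatially local deformations" can be read as pose-corrective blend shapes in which each joint may only displace the vertices in its own local support. The sketch below illustrates that idea with a hand-written boolean support mask; it assumes SMPL-style per-joint pose features (the flattened matrix R_j - I), which may differ from the features SUPR actually uses, and the function name, toy sizes and random corrective basis are hypothetical. If each of SUPR's 75 joints is parameterized by a 3D axis-angle vector, as in SMPL, the full pose vector has 75 × 3 = 225 entries; the toy below uses only two joints.

```python
import numpy as np

def rot_z(angle):
    """3x3 rotation matrix about the z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def sparse_pose_correctives(pose_features, corrective_basis, support_mask):
    """Sum of per-joint linear correctives, each restricted to its support.

    pose_features:    (J, F) per-joint pose features
    corrective_basis: (J, V, 3, F) per-joint linear corrective blend shapes
    support_mask:     (J, V) boolean; True where joint j may deform vertex v
    returns:          (V, 3) vertex offsets
    """
    per_joint = np.einsum("jvcf,jf->jvc", corrective_basis, pose_features)
    # Zero out every vertex outside each joint's support, then sum over joints.
    per_joint = per_joint * support_mask[:, :, None]
    return per_joint.sum(axis=0)

num_joints, num_verts = 2, 4
rng = np.random.default_rng(0)
corrective_basis = rng.normal(size=(num_joints, num_verts, 3, 9))
support_mask = np.array([[True, True, False, False],    # joint 0: vertices 0-1
                         [False, False, True, True]])   # joint 1: vertices 2-3
pose_features = np.zeros((num_joints, 9))
pose_features[0] = (rot_z(0.3) - np.eye(3)).ravel()     # joint 0 bent, joint 1 at rest
offsets = sparse_pose_correctives(pose_features, corrective_basis, support_mask)
```

Bending joint 0 produces offsets only on vertices 0 and 1; vertices 2 and 3 receive no correction because they lie outside joint 0's support.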

SUPR-Head (Male, Female and Gender-Neutral)

- Same topology as FLAME, but trained on more data.

- Sparse spatially local deformations.

- Pose space, shape space and expression space.
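FLAME, whose topology SUPR-Head shares, combines its shape, pose and expression spaces additively on a template mesh and then applies blend skinning. Assuming SUPR-Head follows the same convention (this page does not state it, and SUPR's sparse per-joint pose term is simply folded into $B_P$ here), the posed head mesh can be written as:

$$
T_P(\vec{\beta}, \vec{\theta}, \vec{\psi}) = \bar{T} + B_S(\vec{\beta}; \mathcal{S}) + B_P(\vec{\theta}; \mathcal{P}) + B_E(\vec{\psi}; \mathcal{E}),
\qquad
M(\vec{\beta}, \vec{\theta}, \vec{\psi}) = W\big(T_P(\vec{\beta}, \vec{\theta}, \vec{\psi}),\, J(\vec{\beta}),\, \vec{\theta},\, \mathcal{W}\big)
$$

Here $\bar{T}$ is the template mesh, $\vec{\beta}$, $\vec{\theta}$ and $\vec{\psi}$ are the shape, pose and expression coefficients with bases $\mathcal{S}$, $\mathcal{P}$ and $\mathcal{E}$, $J(\vec{\beta})$ gives the shape-dependent joint locations, and $W$ is the blend-skinning function with weights $\mathcal{W}$.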

SUPR-Hand (Male, Female and Gender-Neutral)

- Same topology as MANO, but trained on more data.

- Sparse spatially local deformations.

- Pose space and shape space.

- Constrained kinematic tree for the fingers.

SUPR-Foot (Male, Female and Gender-Neutral)

- Sparse spatially local deformations.

- Pose space and shape space.

- Learned ground contact deformations.
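The abstract describes a non-linear function that predicts foot deformation conditioned on foot pose, shape and ground contact. The sketch below only illustrates that conditioning and is not SUPR-Foot's actual network: the two-layer MLP, the contact definition (vertices within a small `threshold` of a y-up ground plane at `ground_height`, in meters), the parameter sizes and all function names are hypothetical, and the random weights stand in for learned ones.

```python
import numpy as np

def ground_contact_features(vertices, ground_height=0.0, threshold=0.005):
    # Signed height of each vertex above a horizontal ground plane (y-up assumed).
    height = vertices[:, 1] - ground_height
    # Binary contact flag: vertex touches or penetrates the plane within `threshold`.
    contact = (height < threshold).astype(float)
    return height, contact

def contact_deformation(pose, shape, contact, params):
    # Concatenate the three conditioning signals into one input vector.
    x = np.concatenate([pose, shape, contact])
    W1, b1, W2, b2 = params
    h = np.maximum(0.0, W1 @ x + b1)        # hidden layer with ReLU
    return (W2 @ h + b2).reshape(-1, 3)     # per-vertex 3D offsets

rng = np.random.default_rng(0)
foot_vertices = np.array([[0.00, 0.000, 0.00],
                          [0.05, 0.002, 0.01],
                          [0.10, 0.010, 0.00],
                          [0.15, 0.030, 0.02],
                          [0.20, -0.001, 0.01]])
pose = rng.normal(size=6)    # hypothetical foot pose parameters
shape = rng.normal(size=4)   # hypothetical shape coefficients
_, contact = ground_contact_features(foot_vertices)

num_in = pose.size + shape.size + contact.size
num_hidden, num_out = 16, foot_vertices.size
params = (rng.normal(size=(num_hidden, num_in)) * 0.1, np.zeros(num_hidden),
          rng.normal(size=(num_out, num_hidden)) * 0.01, np.zeros(num_out))
offsets = contact_deformation(pose, shape, contact, params)
deformed = foot_vertices + offsets
```

Feeding the contact flags in alongside pose and shape lets the same pose produce different deformations depending on which vertices touch the ground, which a purely pose-driven corrective cannot express.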

News

- 15-03-2023: Utility code to convert SMPL-X bodies to SUPR bodies released on GitHub.

- 24-10-2022: The foot models are available for download.

- 24-10-2022: The code (in TensorFlow and PyTorch) to access the models is now publicly available on GitHub.

- 20-10-2022: The body models are available for download.


Reference

When using SUPR, please cite:

@inproceedings{SUPR:2022,
  author = {Osman, Ahmed A A and Bolkart, Timo and Tzionas, Dimitrios and Black, Michael J.},
  title = {{SUPR}: A Sparse Unified Part-Based Human Body Model},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2022},
  url = {https://supr.is.tue.mpg.de}
}